Paper Summary: Twitter Heron

This summary combines material from "Twitter Heron: Stream Processing at Scale", which appeared at SIGMOD'15, and "Twitter Heron: Towards Extensible Streaming Engines", which appeared at ICDE'17.

Heron is Twitter's stream processing engine. It replaced Apache Storm at Twitter, and all production topologies inside Twitter now run on Heron. Heron is API-compatible with Storm, which made it easy for Storm users to migrate. Reading the two papers, I got the sense that Heron was developed to improve on the debuggability, scalability, and manageability of Storm. While a lot of importance is attributed to performance when comparing systems, these features (debuggability, scalability, and manageability) are often more important in real-world use.

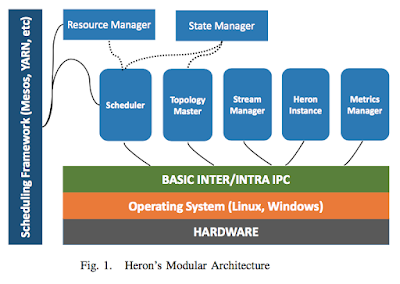

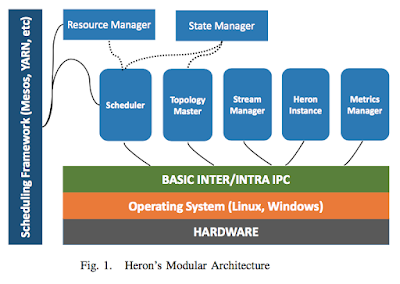

The gripes with Storm

Hard to debug. In Storm, each worker can run disparate tasks. Since logs from multiple tasks are written into a single file, it is hard to identify the errors or exceptions associated with a particular task. Moreover, a single host may run multiple worker processes, each of which could belong to a different topology. Thus, when a topology misbehaves (due to load, faulty code, or hardware), it is hard to determine the root cause of the performance degradation.

Wasteful for scheduling resources at the datacenter. Storm assumes that every worker is homogeneous. This results in inefficient utilization of allocated resources, and often leads to overprovisioning, because every worker is sized to match the memory needs of the worker with the highest requirements.
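To make the overprovisioning point concrete, here is a small back-of-the-envelope sketch. The per-worker memory numbers and the function names are made up for illustration; they do not come from the papers.

```python
def homogeneous_allocation_gb(needs_gb):
    """Memory reserved when every worker is sized like the largest one."""
    return max(needs_gb) * len(needs_gb)


def per_worker_allocation_gb(needs_gb):
    """Memory reserved when each worker is sized to its own need."""
    return sum(needs_gb)


# Hypothetical per-worker memory needs, in GB.
needs_gb = [2, 2, 3, 10]
uniform = homogeneous_allocation_gb(needs_gb)    # 10 GB * 4 workers
tailored = per_worker_allocation_gb(needs_gb)    # 2 + 2 + 3 + 10
print(f"uniform={uniform} GB, tailored={tailored} GB, idle={uniform - tailored} GB")
```

With these made-up numbers, 23 of the 40 reserved GB sit idle, all because one worker needs 10 GB.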

Nimbus-related problems. The Storm Nimbus scheduler does not support resource reservation and isolation at a granular level for Storm workers. Moreover, the Nimbus component is a single point of failure.

ZooKeeper-related gripes. Storm uses ZooKeeper extensively to manage heartbeats from the workers and the supervisors. This limits the number of workers per topology and the total number of topologies in a cluster, since ZooKeeper becomes the bottleneck at higher numbers.

Lack of backpressure. In Storm, the sender does not adapt to the speed or capacity of the receiver; instead, messages are dropped if the receiver can't handle them.
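The difference between dropping and backpressure can be illustrated with a toy simulation. This is not Storm's or Heron's implementation; it is a generic tick-based sketch in which the function name, the buffer capacity, and the consumption rate are all assumptions chosen for illustration.

```python
from collections import deque


def simulate(num_messages, capacity, backpressure, consume_every=2):
    """The sender tries to emit one message per tick; the receiver
    consumes one message every `consume_every` ticks."""
    buffer = deque()
    next_msg = 0
    delivered = dropped = tick = 0
    while next_msg < num_messages or buffer:
        tick += 1
        if next_msg < num_messages:
            if len(buffer) < capacity:
                buffer.append(next_msg)
                next_msg += 1
            elif not backpressure:
                dropped += 1      # receiver is full: the message is lost
                next_msg += 1
            # With backpressure the sender stalls and retries next tick.
        if tick % consume_every == 0 and buffer:
            buffer.popleft()
            delivered += 1
    return delivered, dropped, tick


print("drop on overflow:", simulate(100, capacity=4, backpressure=False))
print("backpressure:    ", simulate(100, capacity=4, backpressure=True))
```

In the first run the slow receiver forces the sender to lose messages; in the second run nothing is lost, but the sender takes more ticks to finish because it is slowed down to the receiver's pace.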

Heron's modular architecture

As in Storm, a Heron topology is a directed graph of spouts and bolts. (The spouts are sources of streaming input data, whereas the bolts perform computations on the streams they receive from spouts or other bolts.)
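As a rough illustration of "a directed graph of spouts and bolts", here is a minimal sketch. The `Topology` class and its methods are hypothetical and are not the Storm or Heron API; the word-count components are just a familiar example.

```python
from dataclasses import dataclass, field


@dataclass
class Topology:
    """Hypothetical model: spouts are sources, bolts list their upstreams."""
    spouts: set = field(default_factory=set)
    bolts: dict = field(default_factory=dict)  # bolt name -> upstream names

    def add_spout(self, name):
        self.spouts.add(name)

    def add_bolt(self, name, inputs):
        # A bolt may only read from components that already exist.
        for src in inputs:
            if src not in self.spouts and src not in self.bolts:
                raise ValueError(f"unknown upstream component: {src}")
        self.bolts[name] = list(inputs)

    def edges(self):
        """Directed edges (upstream, downstream) of the graph."""
        return [(src, bolt) for bolt, inputs in self.bolts.items() for src in inputs]


topology = Topology()
topology.add_spout("sentences")             # source of streaming input
topology.add_bolt("split", ["sentences"])   # bolt fed by a spout
topology.add_bolt("count", ["split"])       # bolt fed by another bolt
print(topology.edges())
```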

When a topology is submitted to Heron, the Resource Manager first determines how many containers should be allocated for the topology. The first container runs the Topology Master, which is the process responsible for managing the topology throughout its existence. The remaining containers each run a Stream Manager, a Metrics Manager, and a set of Heron Instances, which are essentially spouts or bolts that each run in their own JVM. The Stream Manager is the process responsible for routing tuples among Heron Instances. The Metrics Manager collects several metrics about the status of the processes in a container.

The Resource Manager then passes this allocation information to the Scheduler, which is responsible for actually allocating the required resources from the underlying scheduling framework, such as YARN or Aurora. The Scheduler is also responsible for starting all the Heron processes assigned to each container.
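The container layout described above can be sketched as follows. The round-robin placement and the `instances_per_container` knob are my own assumptions for illustration; the papers leave the packing policy to the Resource Manager, and this is not Heron's actual algorithm.

```python
def sketch_packing_plan(instances, instances_per_container):
    """Map container id -> processes. Container 0 holds the Topology Master;
    every other container gets a Stream Manager, a Metrics Manager, and
    some Heron Instances (placed round-robin here, as an assumption)."""
    plan = {0: ["topology-master"]}
    # Ceiling division: how many worker containers are needed.
    num_workers = -(-len(instances) // instances_per_container)
    for container_id in range(1, num_workers + 1):
        plan[container_id] = ["stream-manager", "metrics-manager"]
    for i, instance in enumerate(instances):
        plan[1 + i % num_workers].append(instance)
    return plan


plan = sketch_packing_plan(
    ["spout-0", "spout-1", "bolt-0", "bolt-1", "bolt-2"],
    instances_per_container=2,
)
for container_id, processes in plan.items():
    print(container_id, processes)
```

A plan like this is what would then be handed to the Scheduler, which starts the listed processes in each container.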

Heron is designed to be compositional, so it is possible to plug extensions into it and customize it. Heron allows the developer to create a new implementation of a specific Heron module (such as the scheduler or the resource manager) and plug it into the system without disrupting the remaining modules or the communication mechanisms between them. The papers make a big deal of this modularity/compositionality, and the ICDE'17 paper is dedicated entirely to this aspect of Heron's design.
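Here is a minimal sketch of what "plug in a new implementation of a module" looks like in general. The `Scheduler` interface, its `start` method, and both implementations are hypothetical names of mine, not Heron's actual extension API.

```python
from abc import ABC, abstractmethod


class Scheduler(ABC):
    """Hypothetical module interface: starts the processes of a container."""

    @abstractmethod
    def start(self, container_id, processes):
        ...


class DryRunScheduler(Scheduler):
    """Records what would be started; stands in for a real framework."""

    def __init__(self):
        self.started = []

    def start(self, container_id, processes):
        self.started.append((container_id, tuple(processes)))


class PrintingScheduler(Scheduler):
    """A second implementation, swapped in without touching submit()."""

    def start(self, container_id, processes):
        print(f"container {container_id}: {', '.join(processes)}")


def submit(plan, scheduler):
    """The rest of the system depends only on the Scheduler interface."""
    for container_id in sorted(plan):
        scheduler.start(container_id, plan[container_id])


plan = {0: ["topology-master"],
        1: ["stream-manager", "metrics-manager", "spout-0"]}
dry = DryRunScheduler()
submit(plan, dry)
submit(plan, PrintingScheduler())
```

The point is only that `submit` never changes when a different scheduler is plugged in.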

Heron's modular architecture also plays nicely with the shared infrastructure at the datacenter. The provisioning of resources (e.g. for containers and even the Topology Master) is cleanly abstracted from the duties of the cluster manager.

Heron Topology Architecture

Having a Topology Master per topology allows each topology to be managed independently of the others (and of other systems in the underlying cluster). Also, the failure of one topology (which can happen, as user-defined code often runs in the bolts) does not impact the other topologies.

The Stream Manager is another critical component of the system, as it manages the routing of tuples among Heron Instances. Each Heron Instance connects to its local Stream Manager to send and receive tuples. All the Stream Managers in a topology connect to one another to form n*n connections, where n is the number of containers of the topology. (I wonder if this quadratically growing number of connections would cause problems for very large n.)
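To get a feel for the numbers, here is a rough count of connections, comparing the Stream Manager mesh with a hypothetical design in which every Heron Instance connects directly to every other instance. The cluster sizes are made up, and I count each pair of endpoints once, so the mesh term is n(n-1)/2, which grows in the same order as the n*n mentioned above.

```python
def stream_manager_mesh(n_containers, instances_per_container):
    """Instances talk only to their local Stream Manager;
    Stream Managers form a full mesh among themselves."""
    local = n_containers * instances_per_container
    mesh = n_containers * (n_containers - 1) // 2
    return local + mesh


def direct_instance_mesh(n_containers, instances_per_container):
    """Hypothetical alternative: every instance pairs with every other."""
    total = n_containers * instances_per_container
    return total * (total - 1) // 2


# Made-up cluster sizes, 10 instances per container.
for n in (10, 100, 1000):
    print(n, stream_manager_mesh(n, 10), direct_instance_mesh(n, 10))
```

The mesh among Stream Managers still grows quadratically in n, but far more slowly than a mesh among all instances would, since there are many instances per container.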

As you can see in Figure 5, each Heron Instance executes only a single task (e.g. running a spout or bolt), so it is easy to debug that instance by simply using tools like jstack and heap dumps on that process. Since metrics collection is granular, it is transparent which component of the topology is failing or slowing down. This fine-grained design also provides resource isolation. Incidentally, this reminds me of the ideas argued in the "Performance-clarity as a first-class design principle" paper.
